via humanoid robots and wearables

ergoCub is a project that develops technologies to catalyze the digital transformation needed to reduce musculoskeletal disorders related to biomechanical risk among future workers. It pursues this objective by developing wearable technologies, humanoid robots, and artificial intelligence, while monitoring how acceptable these technologies are to the people who use them.

IIT (Istituto Italiano di Tecnologia) and INAIL, the Italian National Institute for Insurance against Accidents at Work, collaborate to achieve this goal. INAIL has provided 5 million euros over three years to design and build a new humanoid robot and integrate it with our wearable technologies.

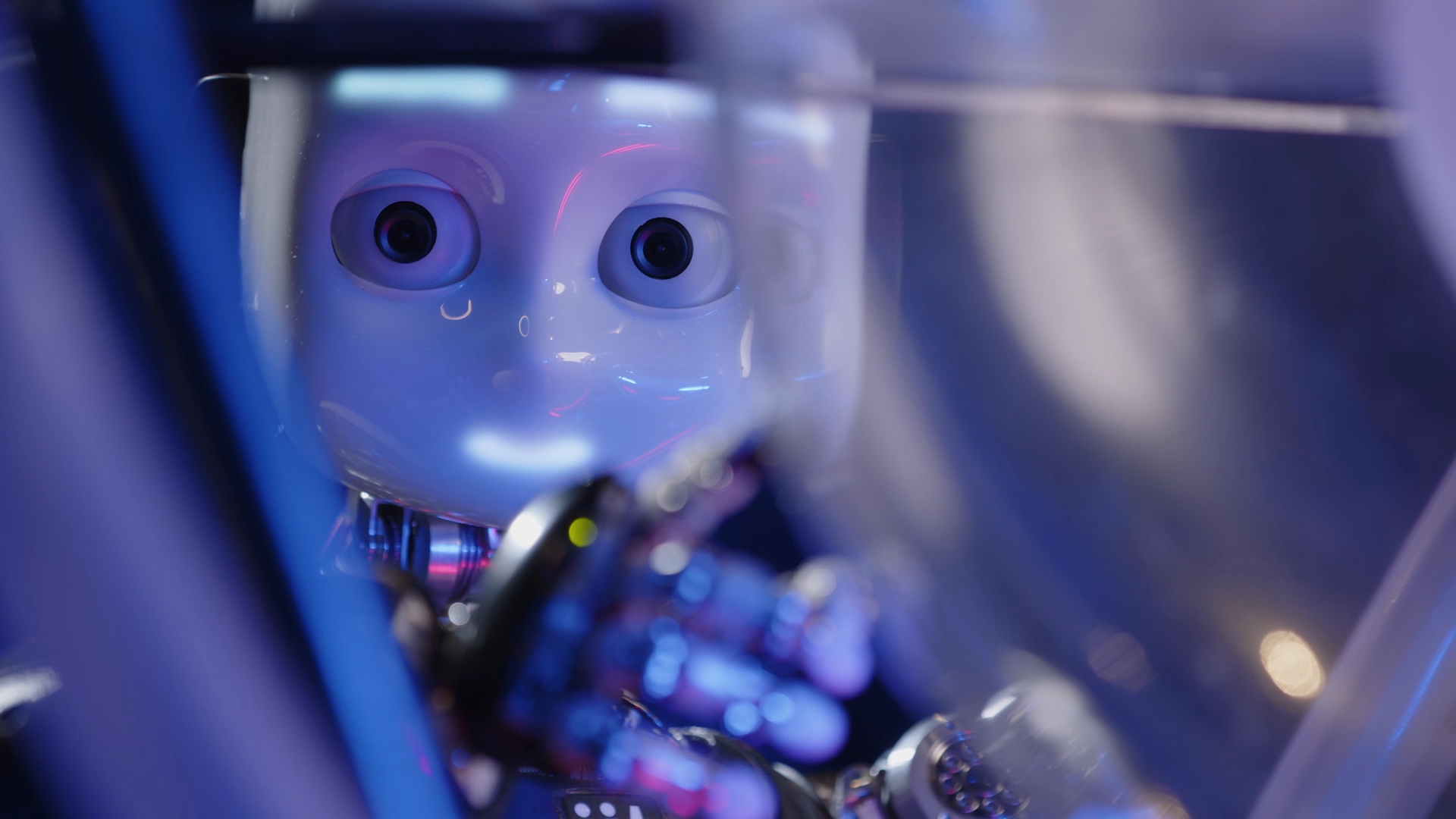

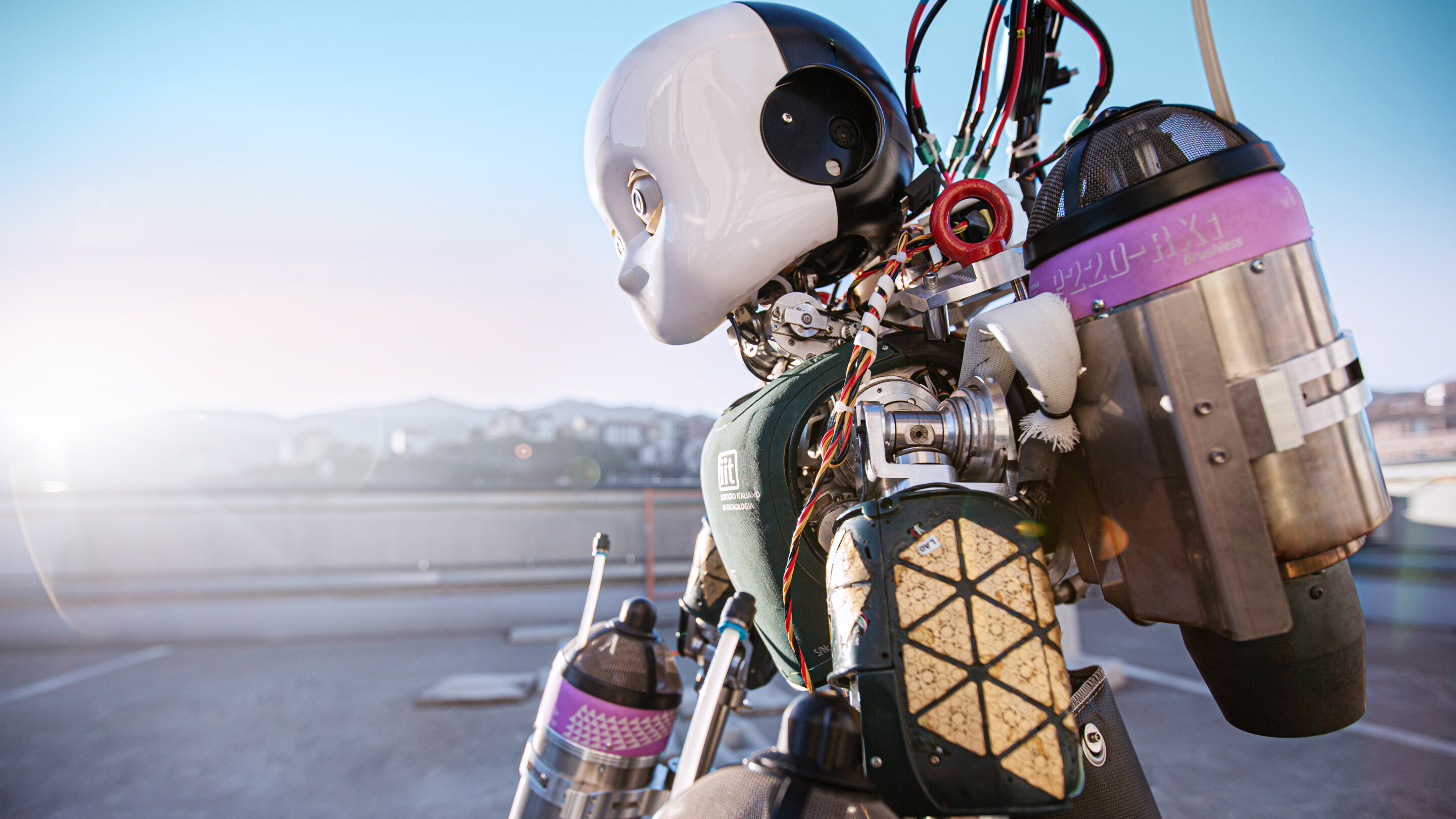

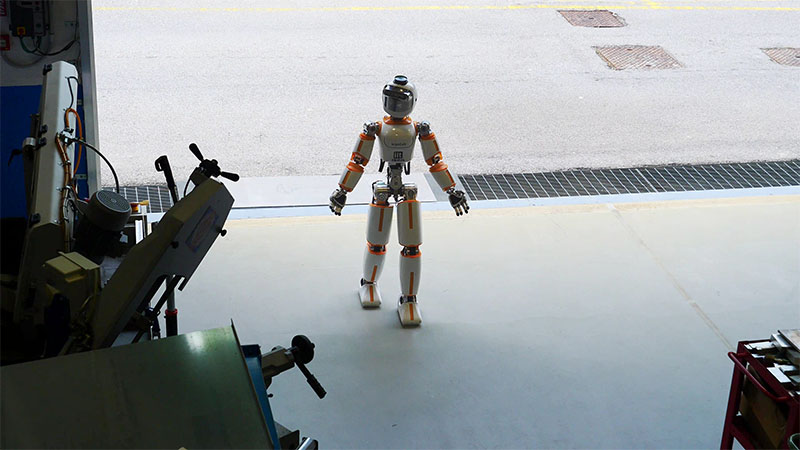

The ergoCub project has developed a humanoid robot, also called ergoCub, as an evolution of the iCub humanoid. Its principal design objective is to be suitable for physical collaboration tasks with humans.

Wearable technologies are essential for monitoring and predicting biomechanical risk, but they cannot reduce that risk while a task is being executed, because they are fundamentally passive devices.

Consider, for instance, a load-lifting task: if the task must be performed, the worker still has to carry it out, and being monitored by wearable technologies does not lighten the load. Fatigue-resistant machines are therefore needed to reduce the biomechanical risk faced by workers engaged in potentially hazardous tasks.

ergoCub Specifications

The ergoCub humanoid robot stands 1.5 m tall, weighs 55.7 kg, and can carry loads of up to approximately 10 kg. Ergonomics was taken into account from the design phase onward: the robot's geometry minimizes the energy spent on human and robot joint effort during lifting tasks.

It incorporates an Intel RealSense camera for depth perception and a lidar for navigation, supporting precise movement in diverse environments.

Its onboard computing combines an NVIDIA Jetson AGX Xavier with an 11th-generation Intel Core i7, and a flexible 2K OLED screen enables expressive interactions. New-generation force-torque sensors allow the robot to respond intuitively to external forces.

A.I. for the Physical Intelligence of the Robot

Collaborative Lifting Tasks for Risk Reduction

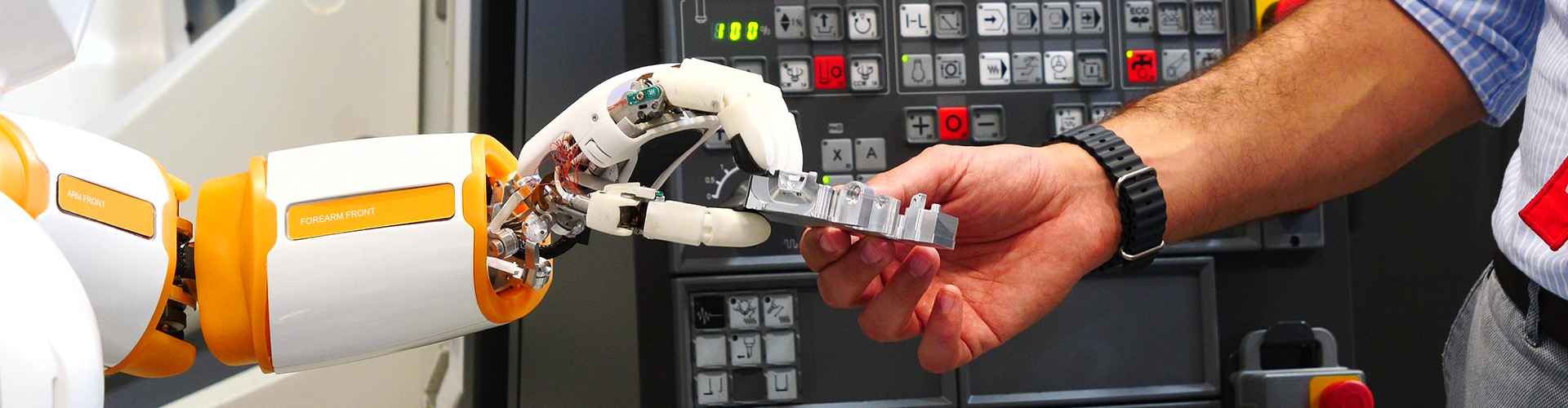

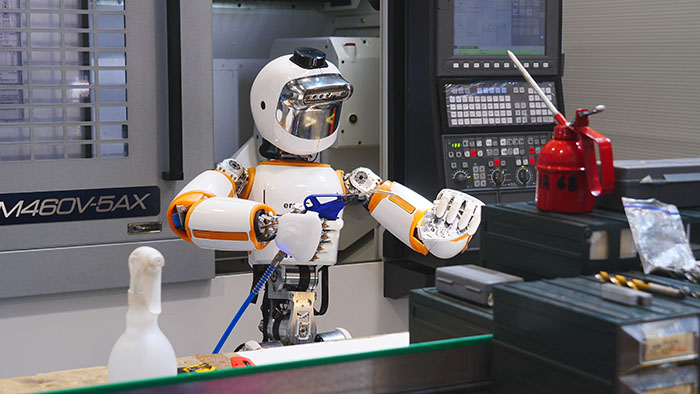

Control, planning, estimation, and A.I. techniques allow the ergoCub robot to perform lifting tasks in collaboration with a human equipped with wearable sensors. The lifting task is particularly interesting: performed without robot support it is highly risky, whereas collaborating with the robot reduces the overall risk during the task.

Locomotion

Locomotion, and walking in particular, is another essential focus of the project. The ergoCub robot can currently walk at a speed similar to that of a human, something its predecessor, iCub3, could not do.

Load Transportation

The ergoCub robot can walk while carrying heavy loads. It can currently transport several kilograms, and the goal is to improve the robot's artificial intelligence to increase this carrying capacity significantly.

Autonomous Navigation in a Simulated Warehouse

A.I. components built on the robot's sensors enable it to localize itself in a warehouse and plan the paths it needs to move. For example, the robot can plan a route between two warehouse shelves while avoiding unexpected obstacles. This capability could also help optimize the arrangement of objects inside the warehouse for workers' ergonomics.

Automatic Manipulation

To collaborate with workers, the ergoCub robot must be able to recognize their intentions as well as the shape and position of the objects they interact with. For this purpose, A.I. vision modules have been developed for object recognition, localization, shape estimation, grasp control, and manipulation.

Recognition of Workers' Intentions

To interact with people, algorithms have been developed to recognize human intentions, such as whether a person is handing over or receiving a load, or wants to shake hands with or greet the robot. The vision techniques developed in this context can be extended or complemented with risk-prevention algorithms that use information from wearable sensors.

Application Scenarios

Healthcare

- Remotely monitor people's health in real time and improve rehabilitation processes;

- Assist health workers.

Industrial

- Improve industrial processes by minimizing the risk of injury from musculoskeletal disorders;

- Perform collaborative tasks with workers.
