through humanoid robots and wearable devices

ergoCub is a project that develops technologies to catalyze the digital transformation needed to reduce the number of musculoskeletal disorders linked to biomechanical risk in future workers. It pursues this goal by developing wearable technologies, humanoid robots, and artificial intelligence, and by monitoring how acceptable these technologies are to the people who use them.

IIT and INAIL (Istituto Nazionale per l'Assicurazione contro gli Infortuni sul Lavoro, the Italian National Institute for Insurance against Accidents at Work) are collaborating to achieve this result. INAIL has allocated 5 million euros over 3 years to design and build a new humanoid robot and combine it with our wearable technologies.

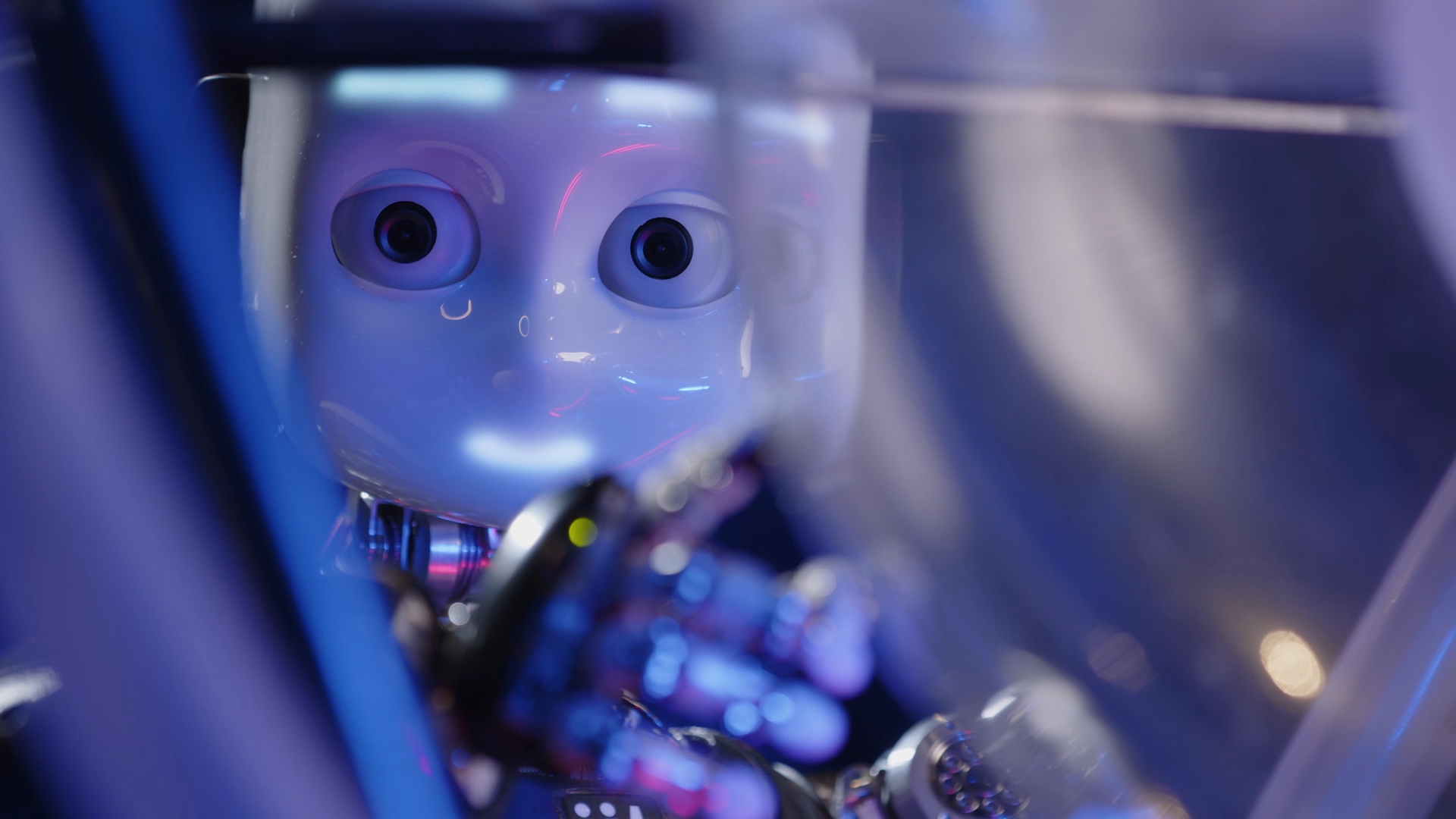

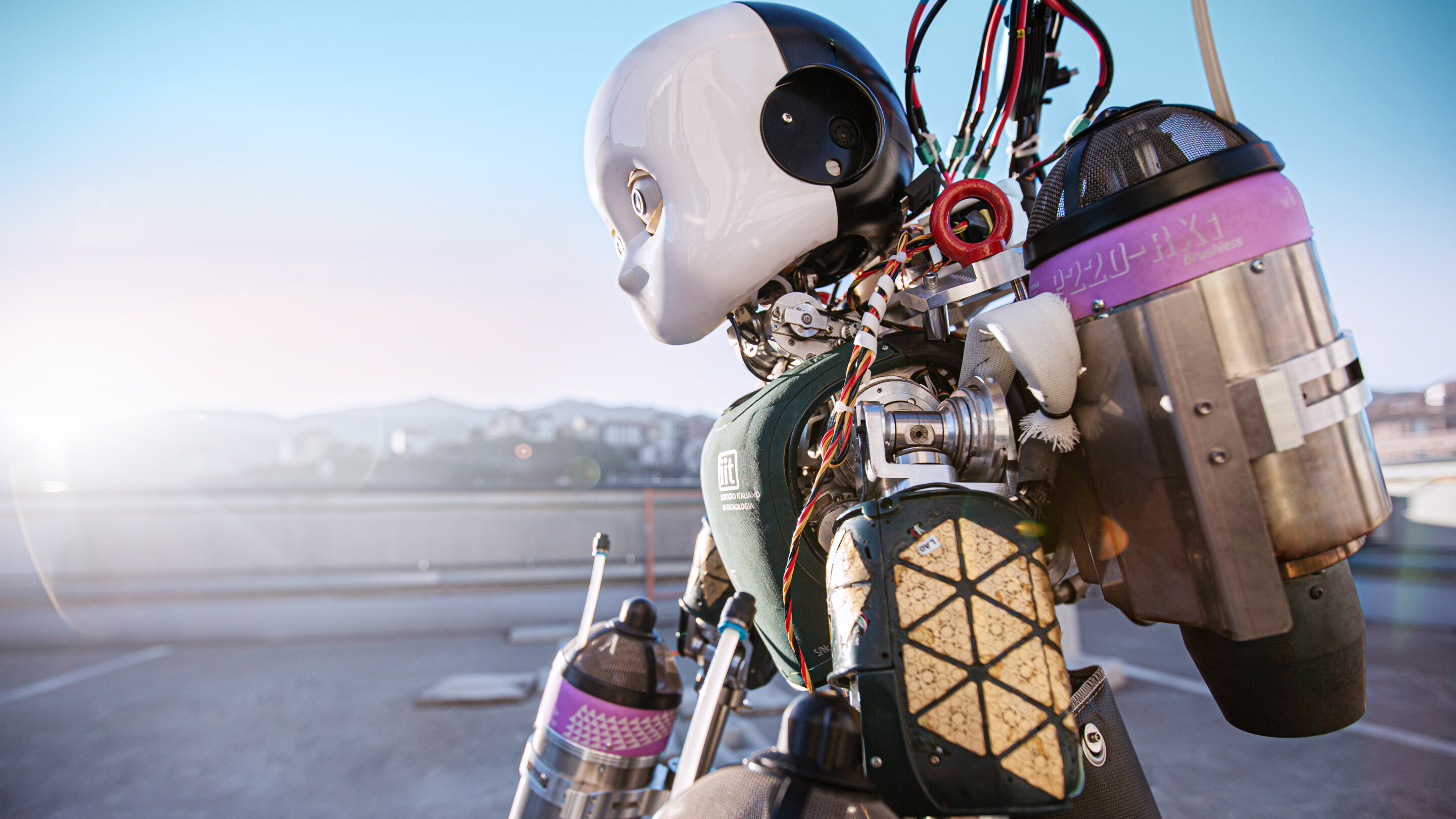

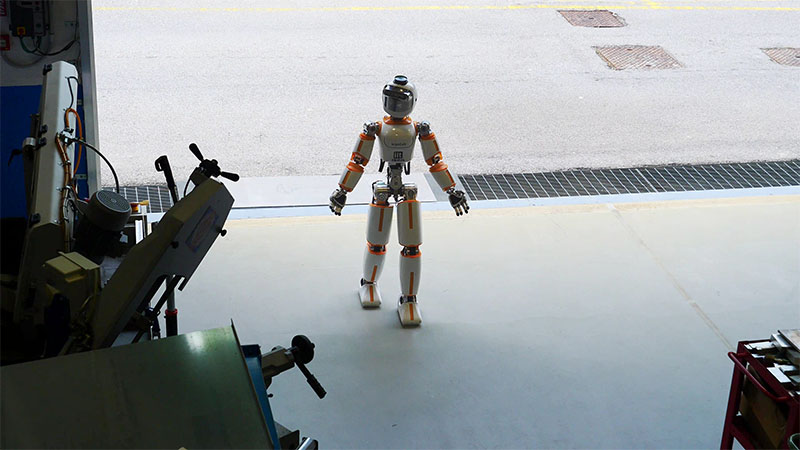

The ergoCub project has developed a humanoid robot, also named ergoCub. It is an evolution of the iCub humanoid robot, designed primarily to be suitable for physical collaboration tasks.

Although wearable technologies are essential for monitoring and predicting biomechanical risk, they cannot reduce that risk while a specific task is being performed, because wearable technologies are fundamentally passive devices.

For example, consider a task that involves lifting heavy loads: if the task is necessary, the worker still has to perform it, even while being monitored by wearable technologies. Fatigue-resistant machines are therefore needed to manage the biomechanical risk of workers engaged in potentially hazardous activities.

ergoCub specifications

The ergoCub humanoid robot is 1.5 m tall, weighs 55.7 kg, and can carry loads of up to about 10 kg. Ergonomics was taken into account during its design: the robot's geometry minimizes the energy expenditure of the combined human-robot effort during lifting tasks.

It incorporates an Intel RealSense camera for depth vision and a lidar for navigation, enabling precise movement in different environments.

It is powered by an NVIDIA Jetson AGX Xavier and an 11th-generation Intel Core i7, and it features a flexible 2K OLED screen that enables expressive interactions. Next-generation force-torque sensors allow the robot to respond intuitively to external forces.

AI for the robot's physical intelligence

Collaborative lifting tasks for risk reduction

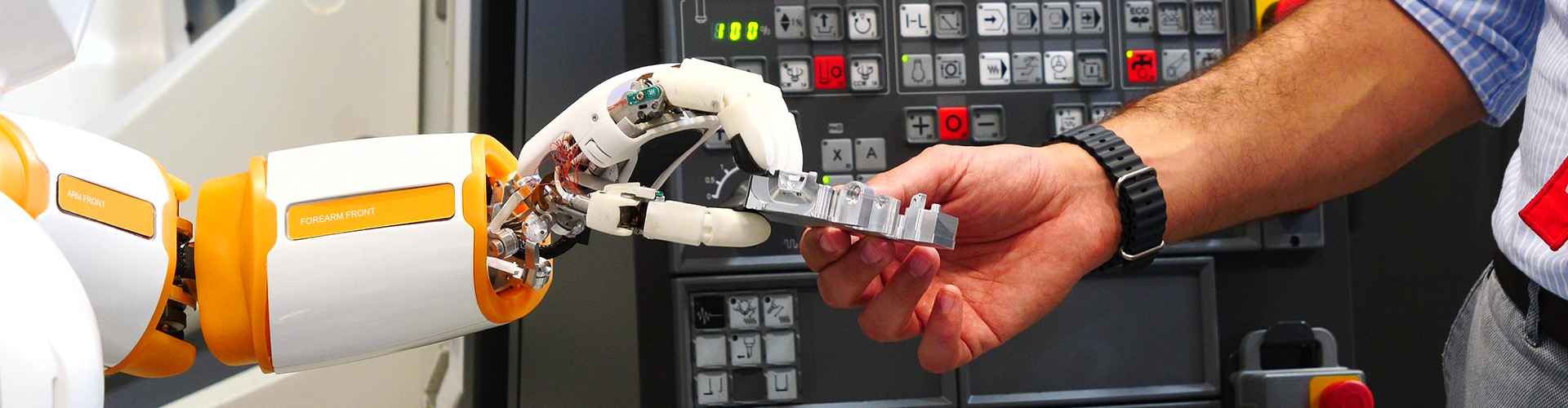

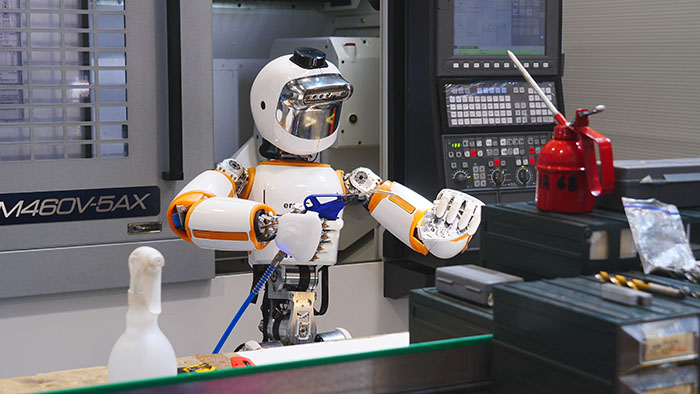

Control, planning, estimation, and AI techniques allow the ergoCub robot to perform lifting tasks in collaboration with a human wearing sensors. The lifting task is particularly interesting: it becomes highly risky when performed without the robot's support, whereas collaborating with the robot reduces the overall risk of the task.

Locomotion

Another key focus of the project is robot locomotion, such as walking. The ergoCub robot can currently walk at a human-like speed, something its predecessor, iCub3, could not do.

Load carrying

The ergoCub robot can walk while carrying heavy loads. It can currently carry several kilograms, and the goal is to work on the robot's artificial intelligence to increase this carrying capacity significantly.

Autonomous navigation in a simulated warehouse

Building on the robot's sensors, AI components have been developed that allow it to localize itself in a warehouse and plan the path it needs to follow. For example, the robot can plan a path between two warehouse shelves while avoiding unexpected obstacles, which could also help optimize the arrangement of items in the warehouse for worker ergonomics.

Recognizing workers' intentions

To collaborate with workers, the ergoCub robot must be able to recognize their intentions, as well as the shape and position of the objects they interact with. To this end, AI vision modules have been developed for object recognition, localization, shape estimation, grasp control, and manipulation.

Automatic manipulation

To interact with people, algorithms have been developed to recognize human intentions: for example, whether a person is handing over or receiving a load, or wants to shake hands with or greet the robot. The vision techniques developed in this context can be extended and combined with risk-prevention algorithms that use information from wearable sensors.

Application scenarios

Healthcare

- Monitor people's health remotely in real time and improve rehabilitation processes;

- Assist healthcare staff.

Industrial

- Improve industrial processes by minimizing the risk of injury from musculoskeletal disorders;

- Perform collaborative tasks with workers.
